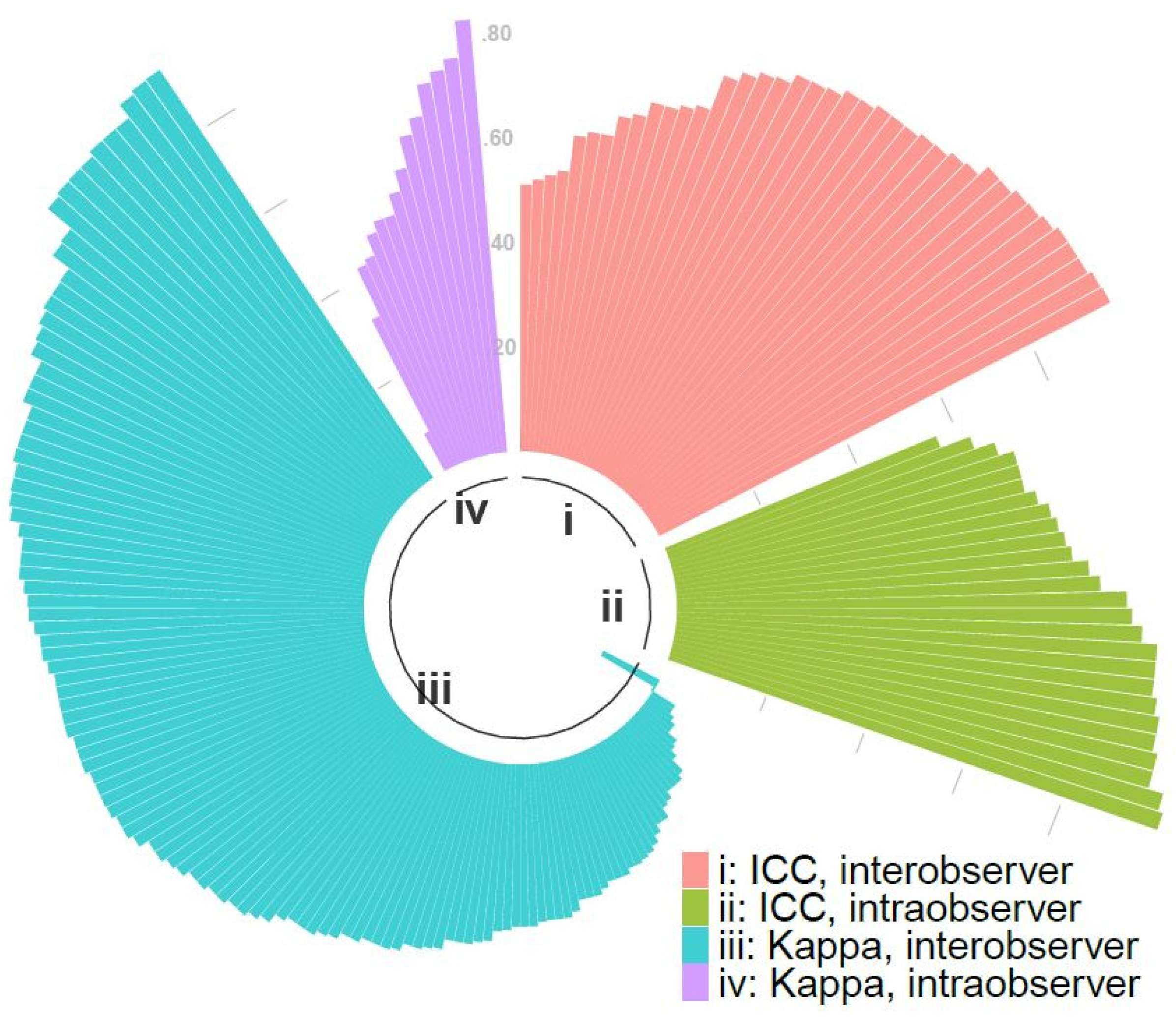

Inter/Intra-Observer Agreement in Video-Capsule Endoscopy: Are We Getting It All Wrong? A Systematic Review and Meta-Analysis | Diagnostics

Intra and Interobserver Reliability and Agreement of Semiquantitative Vertebral Fracture Assessment on Chest Computed Tomography | PLOS ONE

Inter- and Intra-observer Variability in Biopsy of Bone and Soft Tissue Sarcomas | Anticancer Research

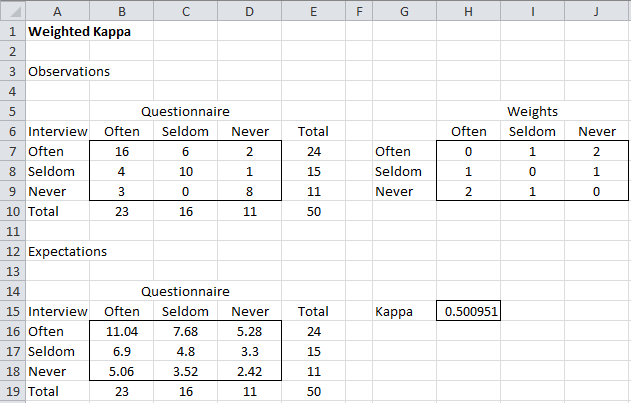

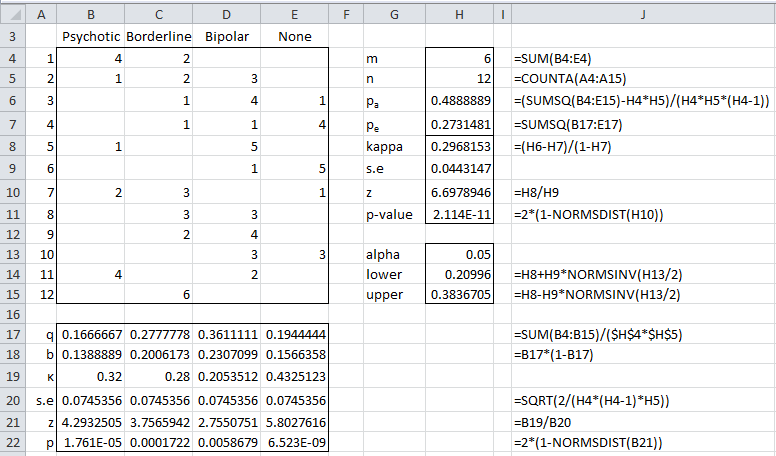

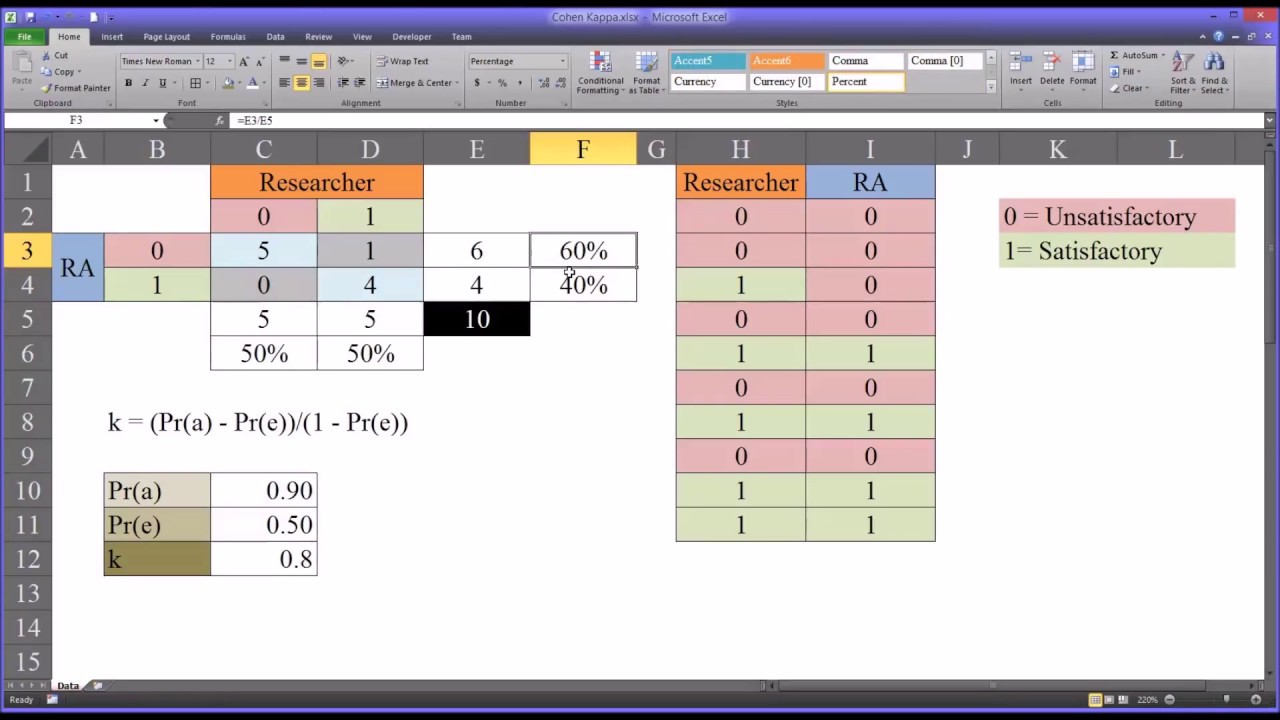

Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to...
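
To illustrate how agreement between two independent observers can be quantified, here is a minimal sketch of Cohen's kappa, one common kappa statistic for two raters assigning categorical labels to the same items. The function name `cohens_kappa` and the example ratings are hypothetical and are not taken from any of the sources listed above.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items (illustrative sketch)."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must rate the same non-empty set of items.")

    n = len(rater_a)

    # Observed agreement: fraction of items on which both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each category, the product of the two raters'
    # marginal proportions, summed over categories.
    count_a = Counter(rater_a)
    count_b = Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)

    if expected == 1.0:
        # Both raters used one identical category for every item;
        # kappa is undefined here, and this sketch returns 1.0 by convention.
        return 1.0

    return (observed - expected) / (1.0 - expected)


# Hypothetical example: 50 items, each rated "yes" or "no" by two observers.
# 20 both "yes", 5 A-yes/B-no, 10 A-no/B-yes, 15 both "no".
example_a = ["yes"] * 20 + ["yes"] * 5 + ["no"] * 10 + ["no"] * 15
example_b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15

# Observed agreement is 0.7 and chance agreement is 0.5, so kappa is about 0.4.
example_kappa = cohens_kappa(example_a, example_b)
```

Kappa corrects raw percentage agreement for the agreement expected by chance alone, which is why the 70% raw agreement in this example corresponds to a kappa of only about 0.4.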